Jongwon Lee

Email / CV

I am a Ph.D. candidate at the University of Illinois Urbana-Champaign, advised by Dr. Tim Bretl. I received my Bachelor's degree from the Korea Advanced Institute of Science and Technology (KAIST) in 2020, graduating Summa Cum Laude and ranking first in my department, and completed my Bachelor's thesis under the guidance of Dr. Ayoung Kim. I have interned at leading institutions, including Amazon Robotics, Google AR, Naver Labs, and the Electronics and Telecommunications Research Institute (ETRI). My Ph.D. research focuses on state estimation in robotics (perception, navigation, and sensor fusion), and my interests extend to broader fields such as computer vision, spatial AI, and machine intelligence. I am also exploring diverse application areas, including robotics, extended reality, autonomous driving, and air mobility.

Note: I am currently on the job market and expect to start a position in 2026. Please feel free to reach out if you are interested in working with me or hiring me, or if you know of a position that would be a great fit.


Research

These include my published works in robotics, automation, state estimation, and optimization.


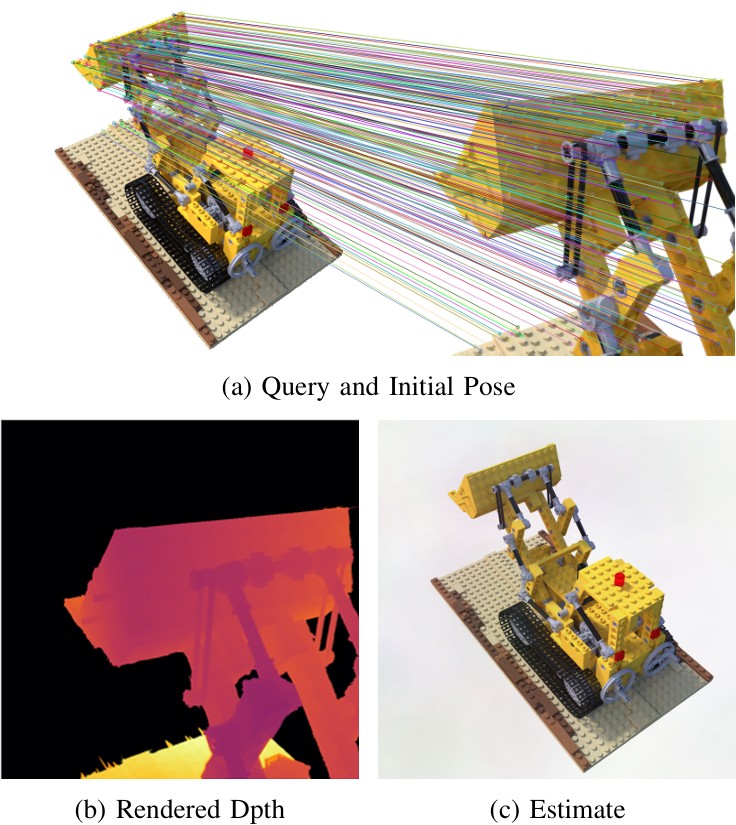

GSFeatLoc: Visual Localization Using Feature Correspondence on 3D Gaussian Splatting
Jongwon Lee and Timothy Bretl
Under Review, 2025
[arxiv]
We introduce a visual localization method on 3D Gaussian splatting using feature correspondence, reducing inference time from more than 10 s to about 0.1 s per image compared with photometric loss–based baselines.


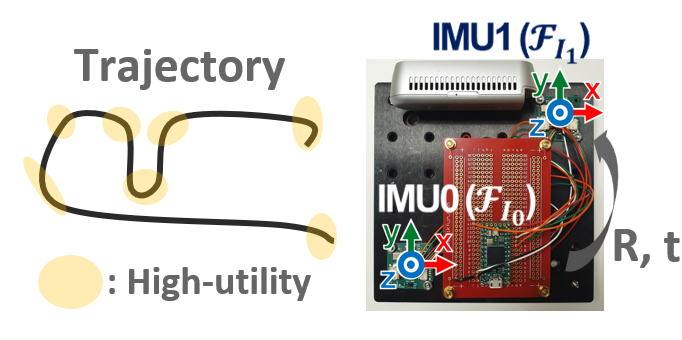

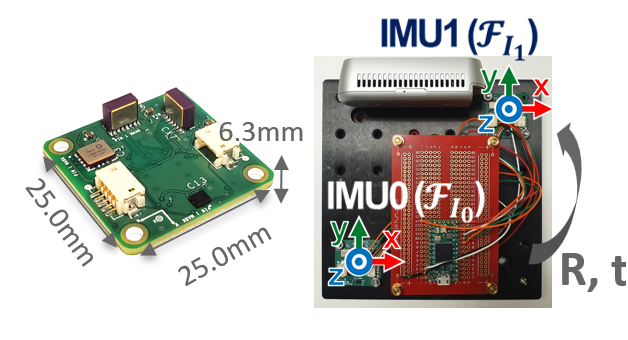

Efficient Extrinsic Self-Calibration of Multiple IMUs Using Measurement Subset Selection
Jongwon Lee, David Hanley, Timothy Bretl
IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2024
[paper] [arxiv] [poster] [slides]
We introduce a fast, efficient method for multi-IMU self-calibration that uses only the most informative measurements.


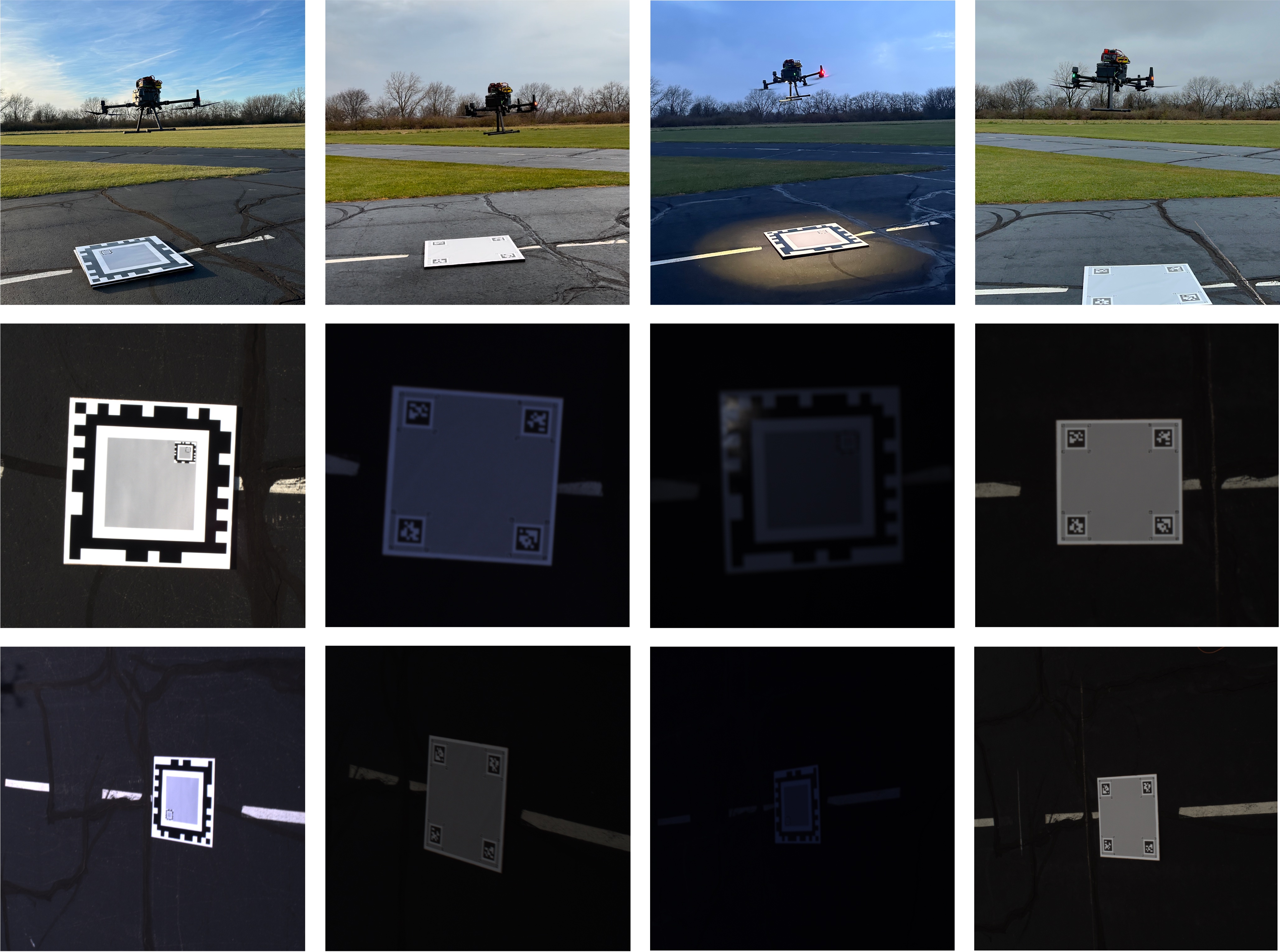

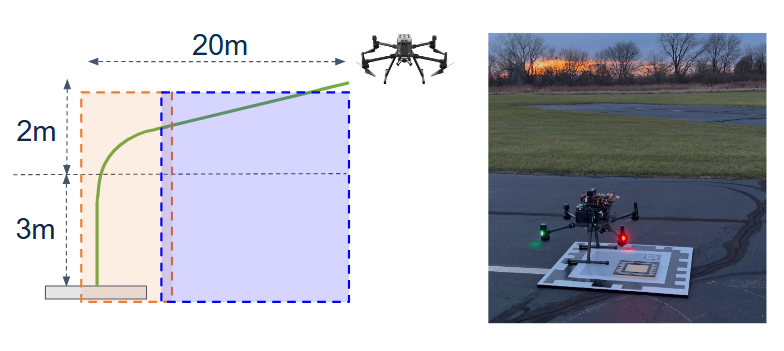

The Impact of Adverse Environmental Conditions on Fiducial Marker Detection from Rotorcraft
Su Yeon Choi, Jongwon Lee, Timothy Bretl
AIAA SciTech Forum, 2024
[paper]
We evaluate the detection and pose estimation of multi-scale fiducial markers under diverse weather conditions for rotorcraft navigation during takeoff and landing.


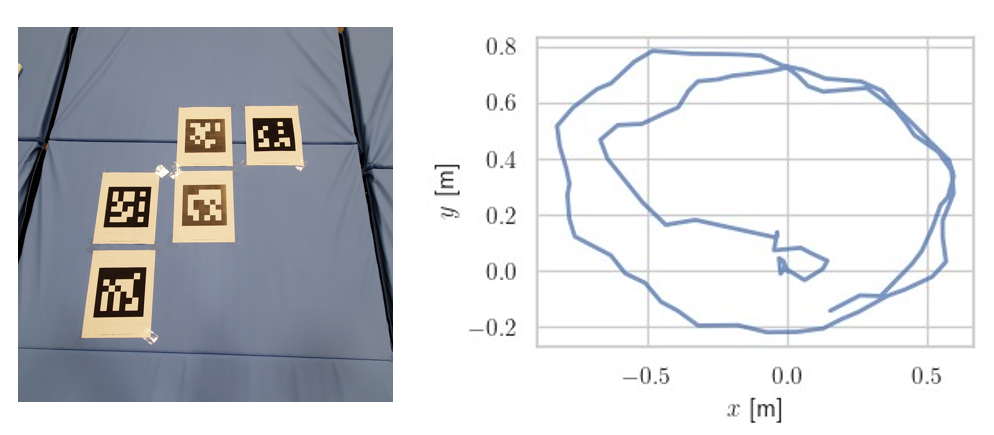

The Use of Multi-Scale Fiducial Markers to Aid Rotorcraft Navigation
Jongwon Lee, Su Yeon Choi, Timothy Bretl
AIAA SciTech Forum, 2024
[paper] [arxiv] [slides]
We demonstrate visual SLAM with multi-scale fiducial markers for rotorcraft navigation during takeoff and landing.


Comparative Study of Visual SLAM-Based Mobile Robot Localization Using Fiducial Markers
Jongwon Lee, Su Yeon Choi, David Hanley, Timothy Bretl
IROS Workshop on Closing the Loop on Localization, 2023
[paper] [arxiv] [poster]
We evaluate visual SLAM modes with fiducial markers, offering insights into mobile robot localization with and without prior marker maps.


Extrinsic Calibration of Multiple Inertial Sensors From Arbitrary Trajectories
Jongwon Lee, David Hanley, Timothy Bretl
IEEE Robotics and Automation Letters (RA-L), 2022
[paper] [arxiv] [code] [poster] [slides]
We introduce a multi-IMU self-calibration method that relies solely on measurements collected from arbitrary trajectories, requiring neither prescribed paths nor other aiding sensors (e.g., cameras).


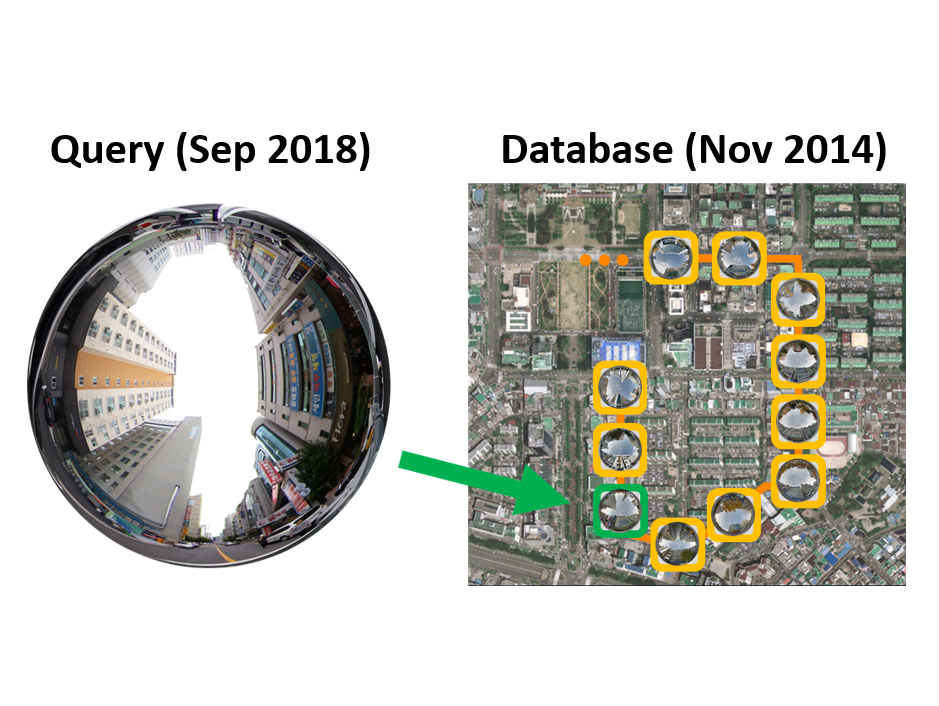

Neural Network-Based Long-Term Place Recognition from Omni-Images
Jongwon Lee, Ayoung Kim
IEEE International Conference on Ubiquitous Robots (UR), 2019
[paper] [slides]
We present a learning-based image retrieval method that uses fisheye images for place recognition in urban environments, addressing scene changes over time.

Other Projects

These include coursework, side projects, and unpublished research work.


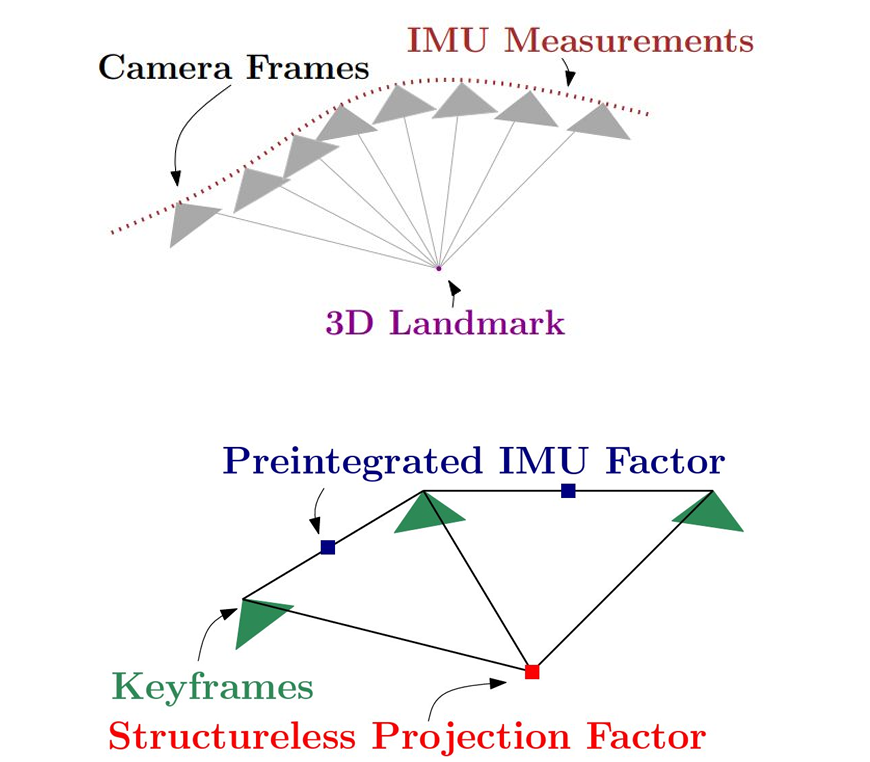

An In-Depth Study on Integrating Inertial Data into Two-View Reconstruction
UIUC AE 598: Introduction to 3D Vision (Class Project)
Spring 2024
[paper] [code] [slides]
Integrating inertial data into two-view reconstruction resolves scale ambiguity in visual odometry, but it increases computation time and reprojection error because the inertial and visual constraints conflict.


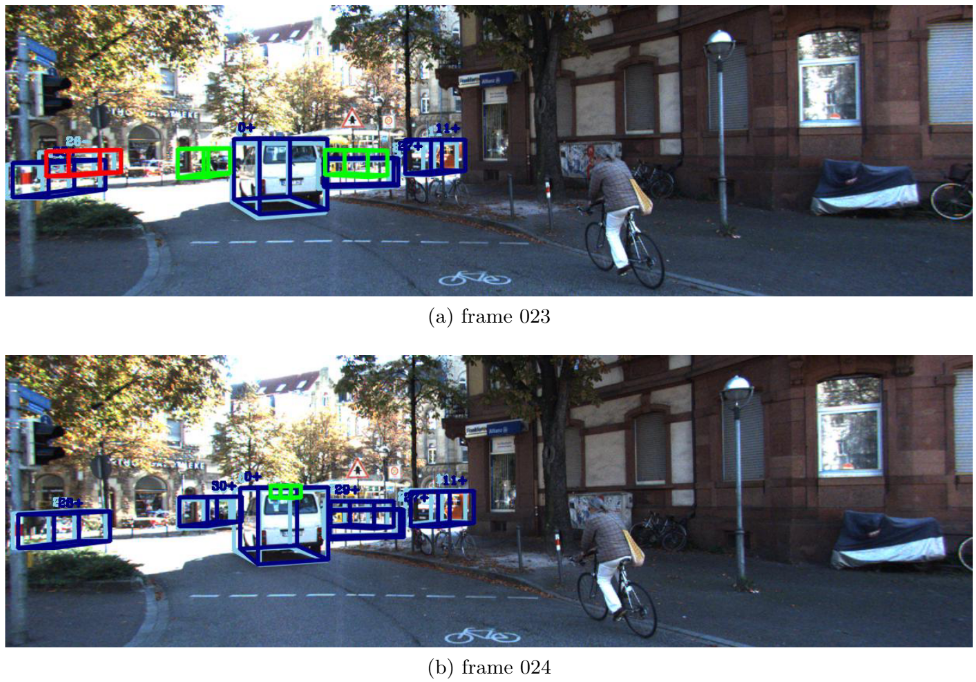

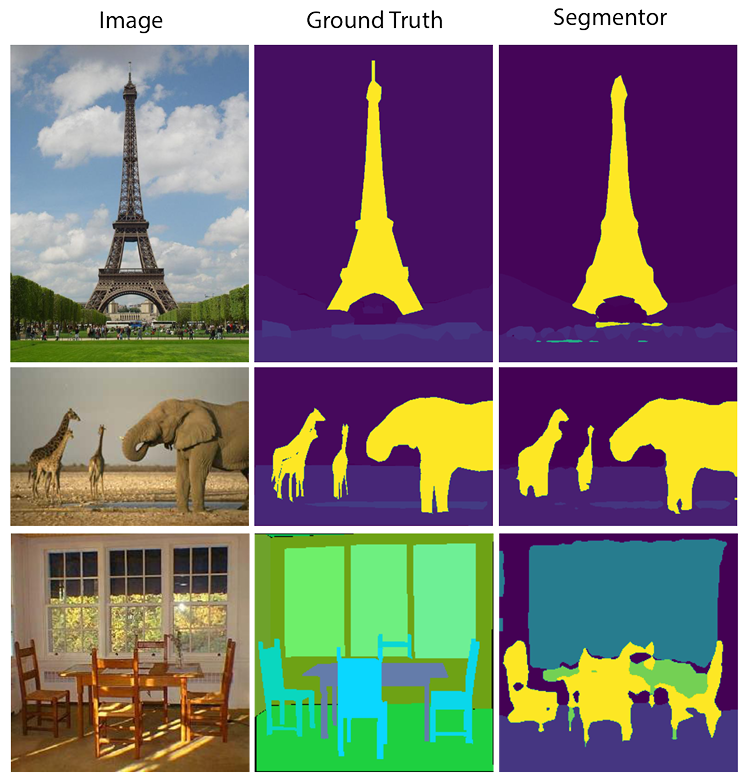

Introduction to Machine Perception
UIUC CS 498: Introduction to Machine Perception
Spring 2022
[website] [code]
UIUC CS 498 class assignments on perspective projection, multi-view geometry, camera odometry from depth point cloud data, semantic segmentation, and 3D multi-object tracking.


Convolutional, Transformer-Based, and Contrastive Learning Methods for Semantic Segmentation
UIUC CS 498: Introduction to Machine Perception (Class Project)
Spring 2022
[paper] [code]
We evaluate three key families of semantic segmentation methods: convolutional, transformer-based, and contrastive learning approaches.


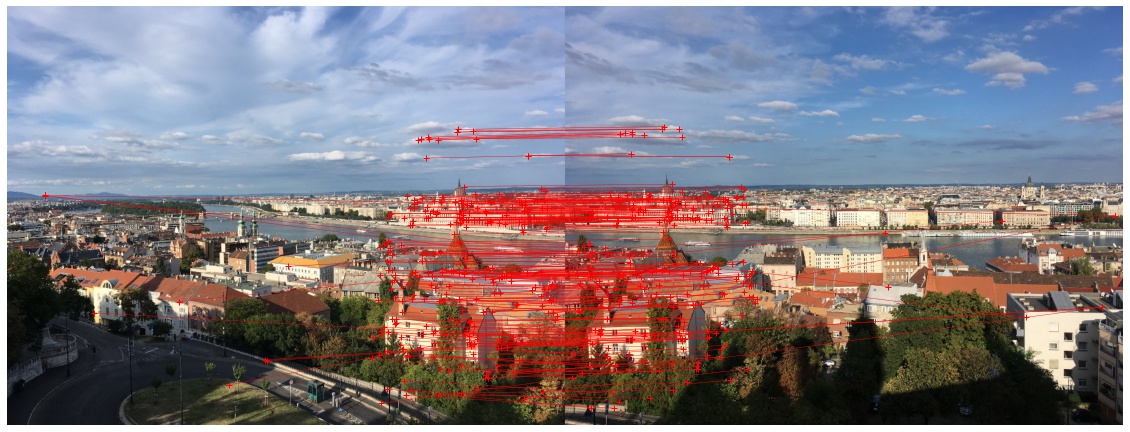

Computer Vision
UIUC ECE 549: Computer Vision
Spring 2021
[website] [code]
UIUC ECE 549 assignments on camera modeling, photography, image processing, geometry, and learning-based image analysis.


Original template from Jon Barron. Jekyll template from Leonid Keselman.